Online Signature

Easier, Quicker, Safer eSignature Solution for SMBs and Professionals

Quickly and effortlessly sign, send, track, and collect electronic signatures with CocoSign’s trusted and legally binding eSign software.

- No credit card required

- 14 days free

Sign Effortlessly

All your documents can now be signed online easily, quickly, and securely, whether by yourself, by others, virtually, or face-to-face on a single device.

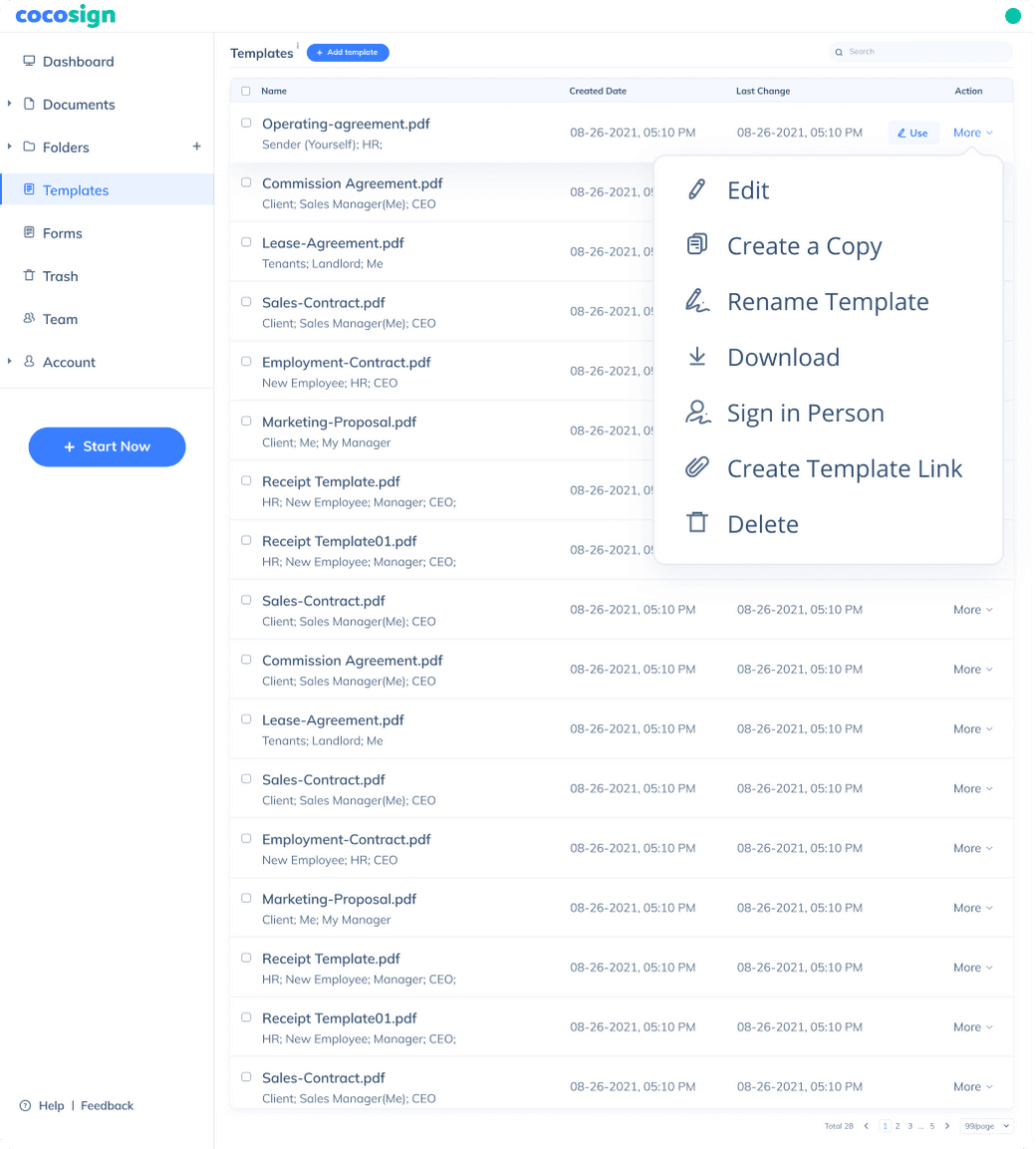

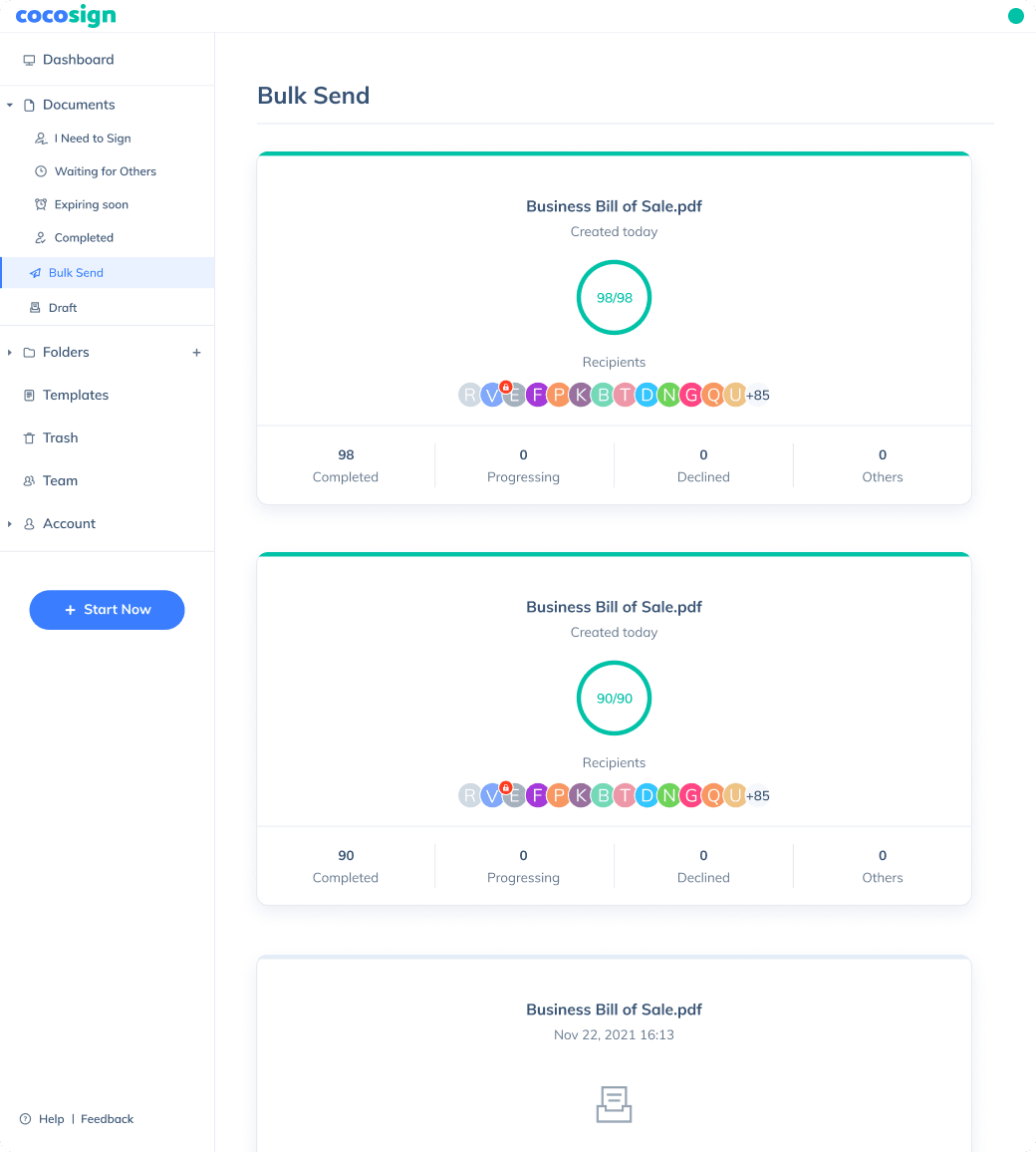

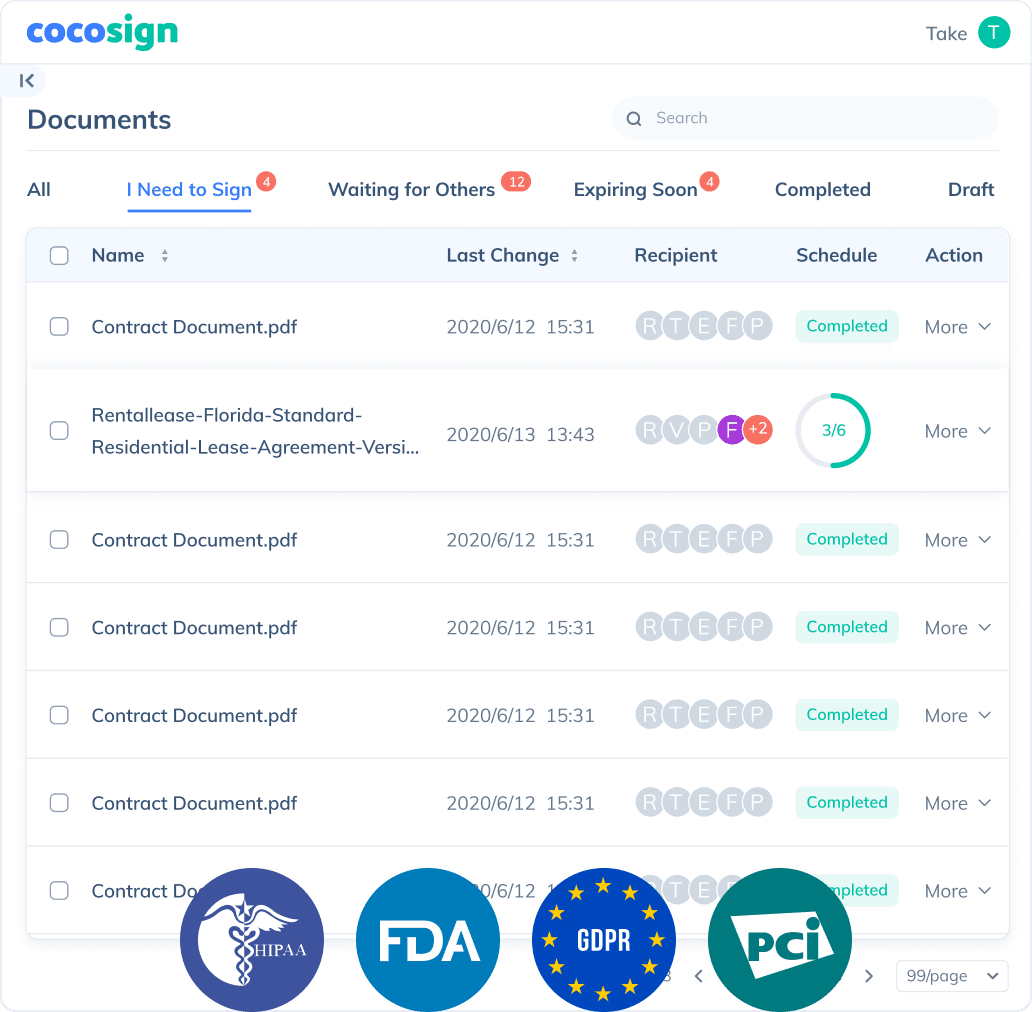

Send documents for signature

Send, track and manage your signature requests for multiple recipients effortlessly.

Learn more about e-signatures

Sign Efficiently

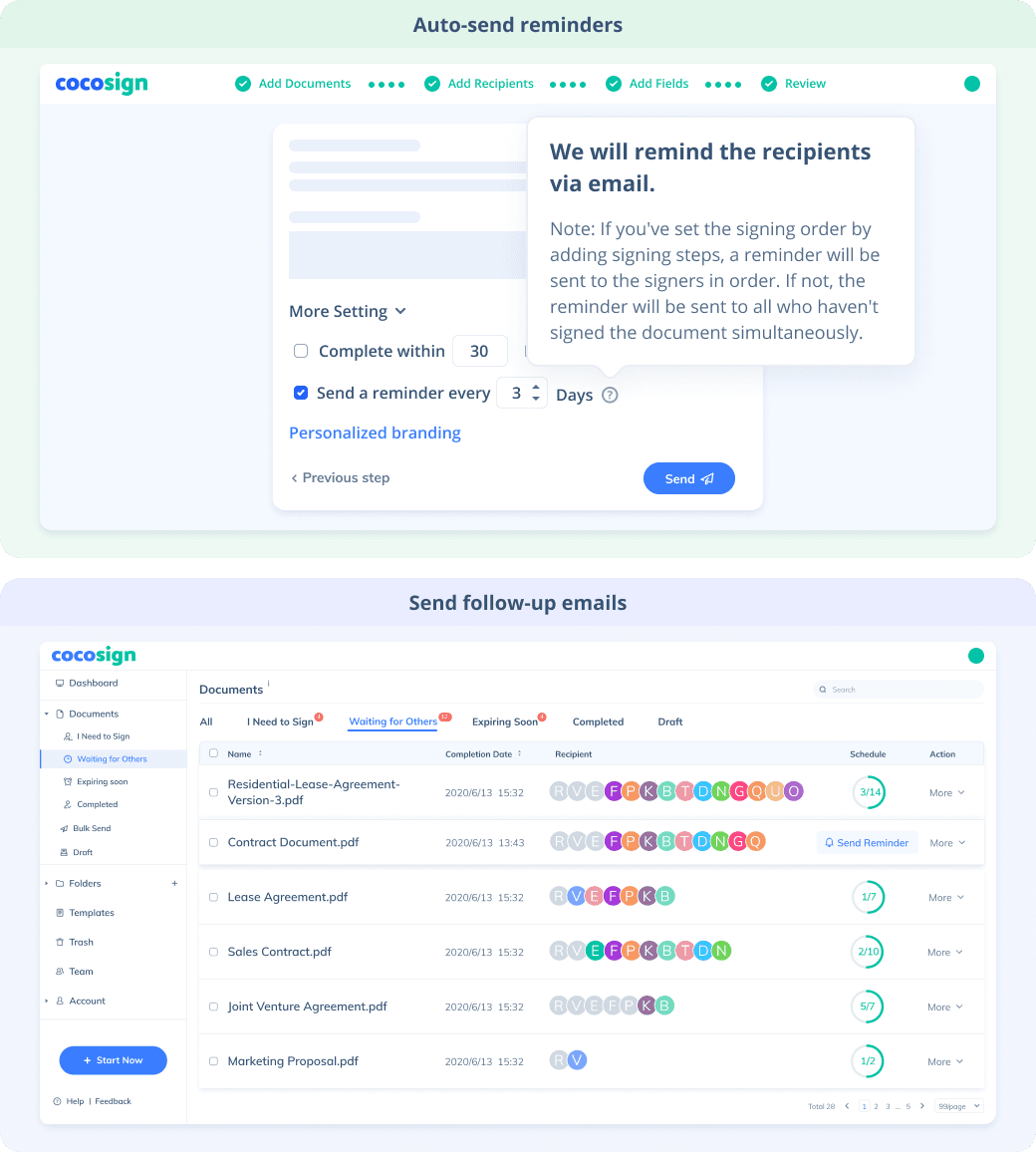

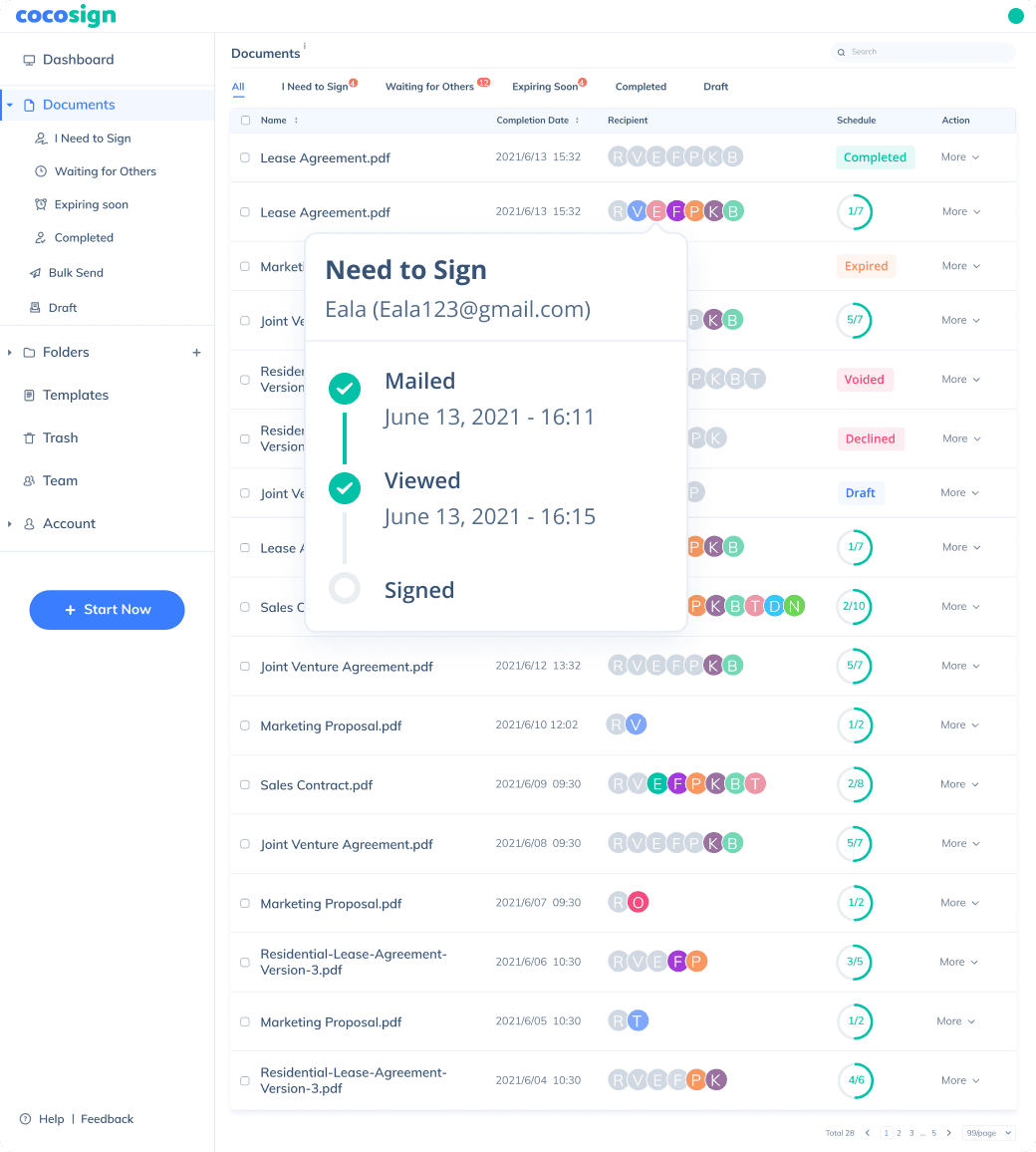

No more endlessly chasing down your signers. CocoSign tracks all signing progress automatically and reminds signers to finish signing on time.

Sign Securely

Stay worry-free about signature forgery, fraud, and data leaks with CocoSign’s reliable and legally valid eSignature service.

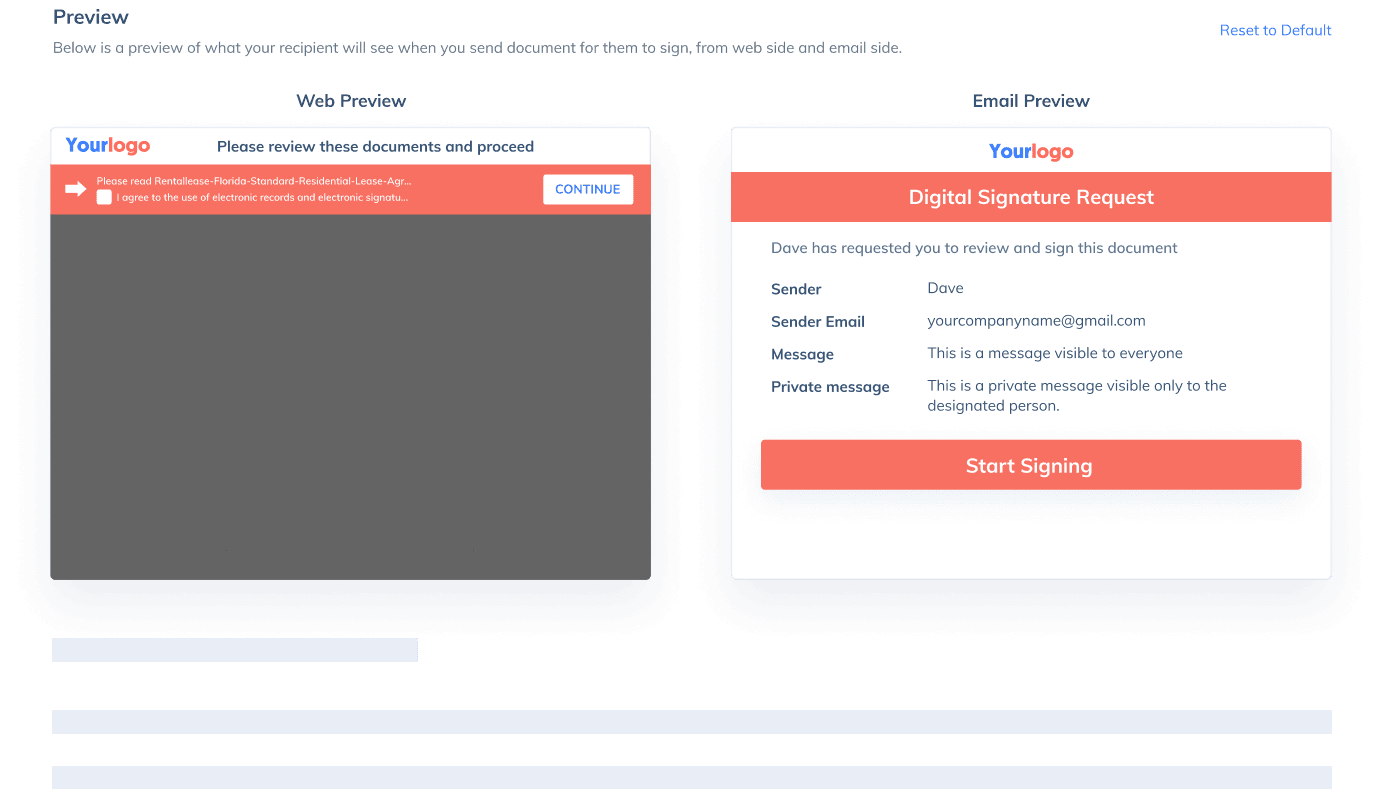

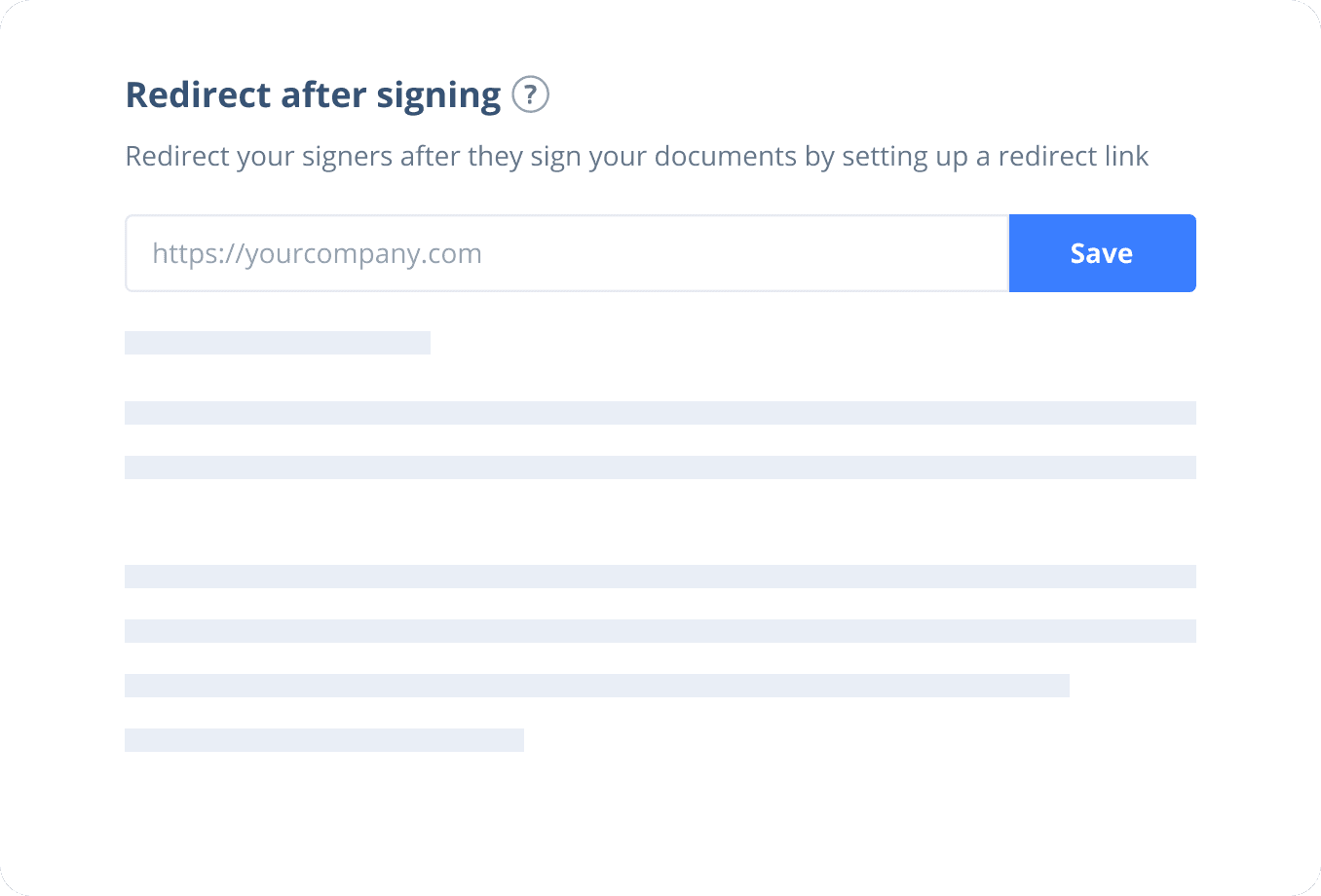

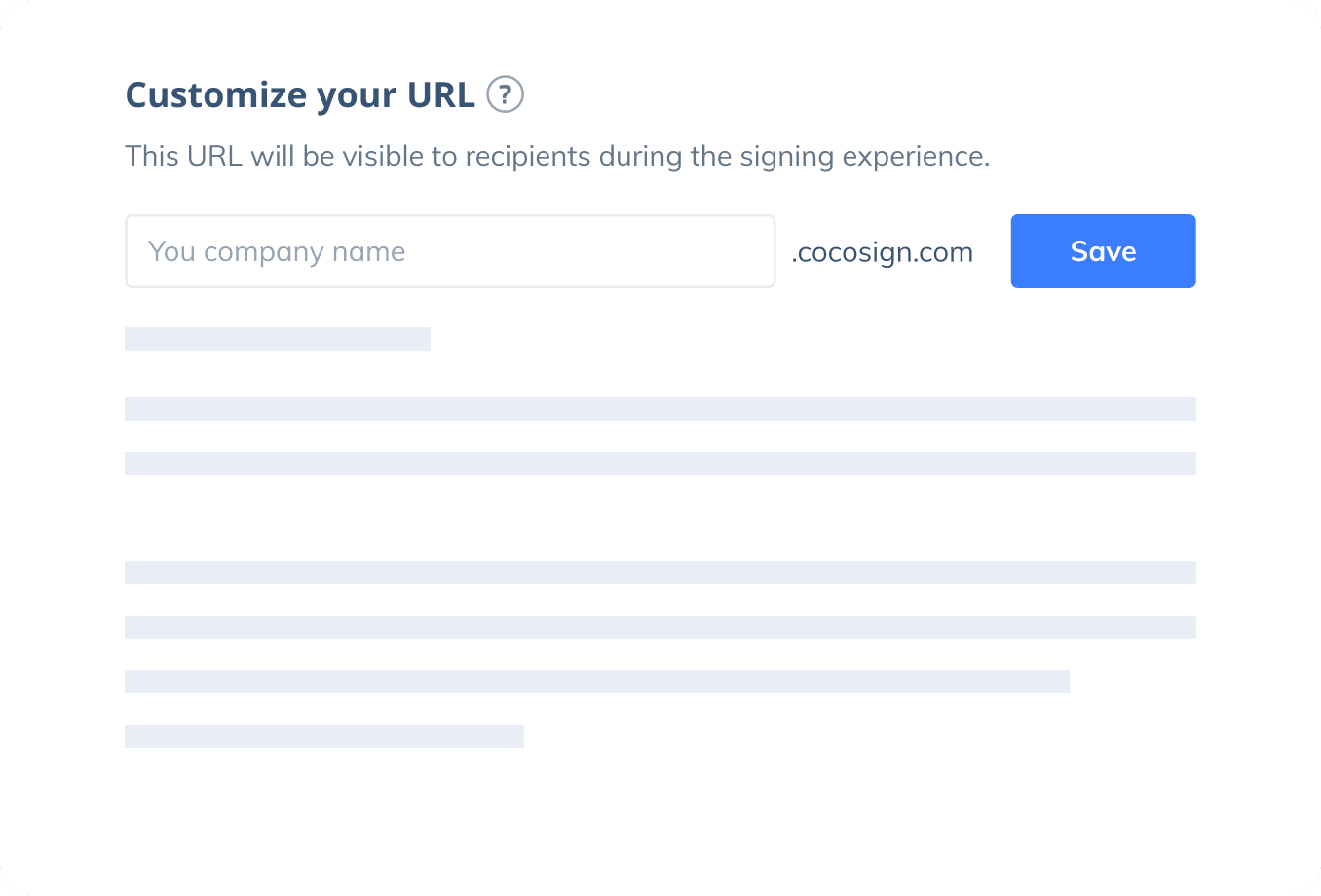

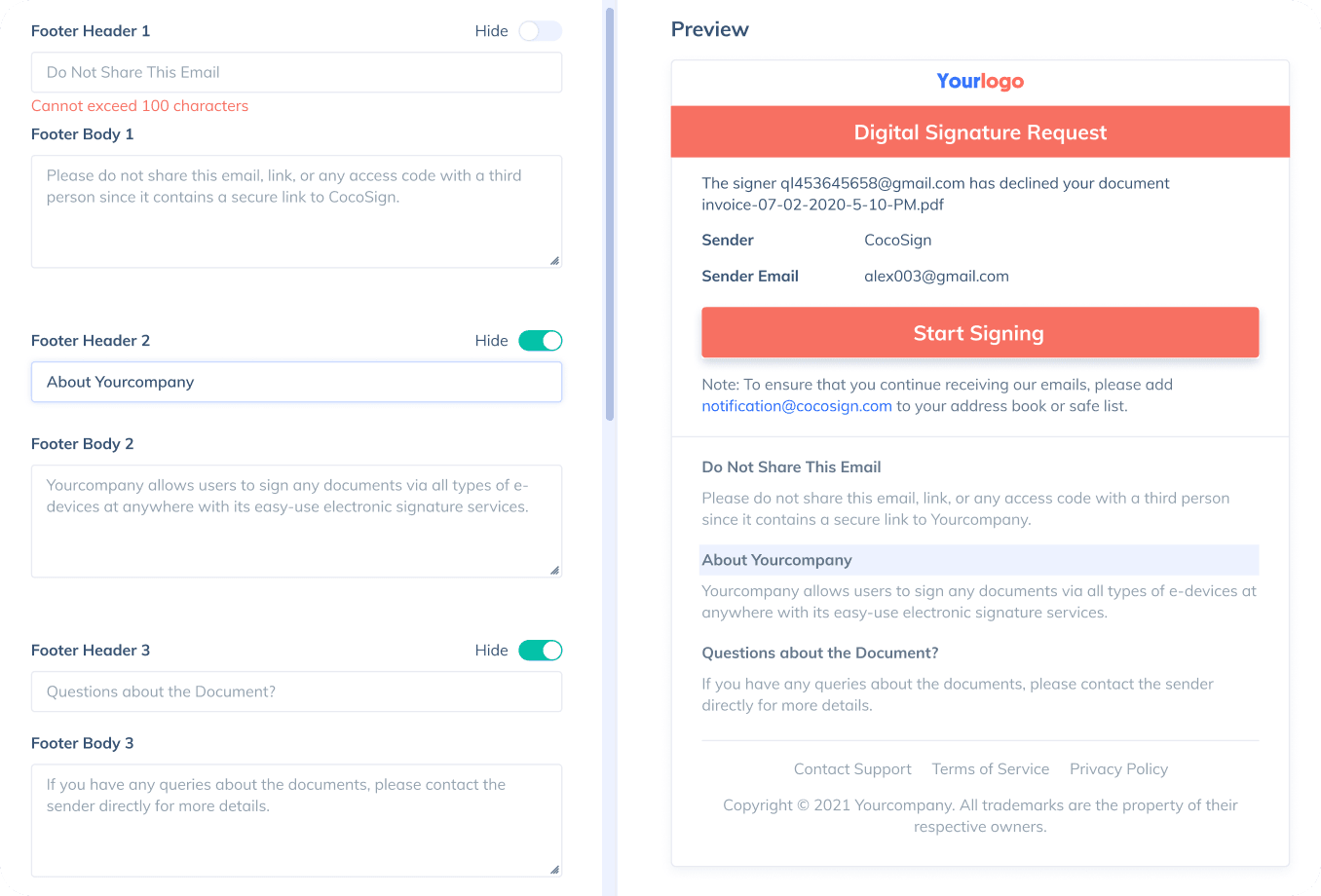

Sign with Your Brand

Show your own brand instead of CocoSign’s when you send signature requests to your signers, and enjoy free publicity along the way.

Award-winning eSignature solution

Digitally sign documents yourself and email the signed copies to others, get documents signed on the spot, or send documents to request signatures.

FAQ

What is an electronic signature?

An electronic signature, also known as an eSignature, is a digital form of signature used to convey consent or approval on online documents such as contracts and agreements, as opposed to a signature on paper. It’s usually legally binding, highly encrypted, and strictly audited.

Is CocoSign a free electronic signature service?

Yes. CocoSign is a leading eSign platform providing legal, secure, and free electronic signature services. You can sign documents yourself or send them for others to sign for free on CocoSign, with no credit card required, no setup fees, and no time limits. As a free user, you can sign unlimited documents and download 3 signed ones.

Are CocoSign’s e-signatures legally valid?

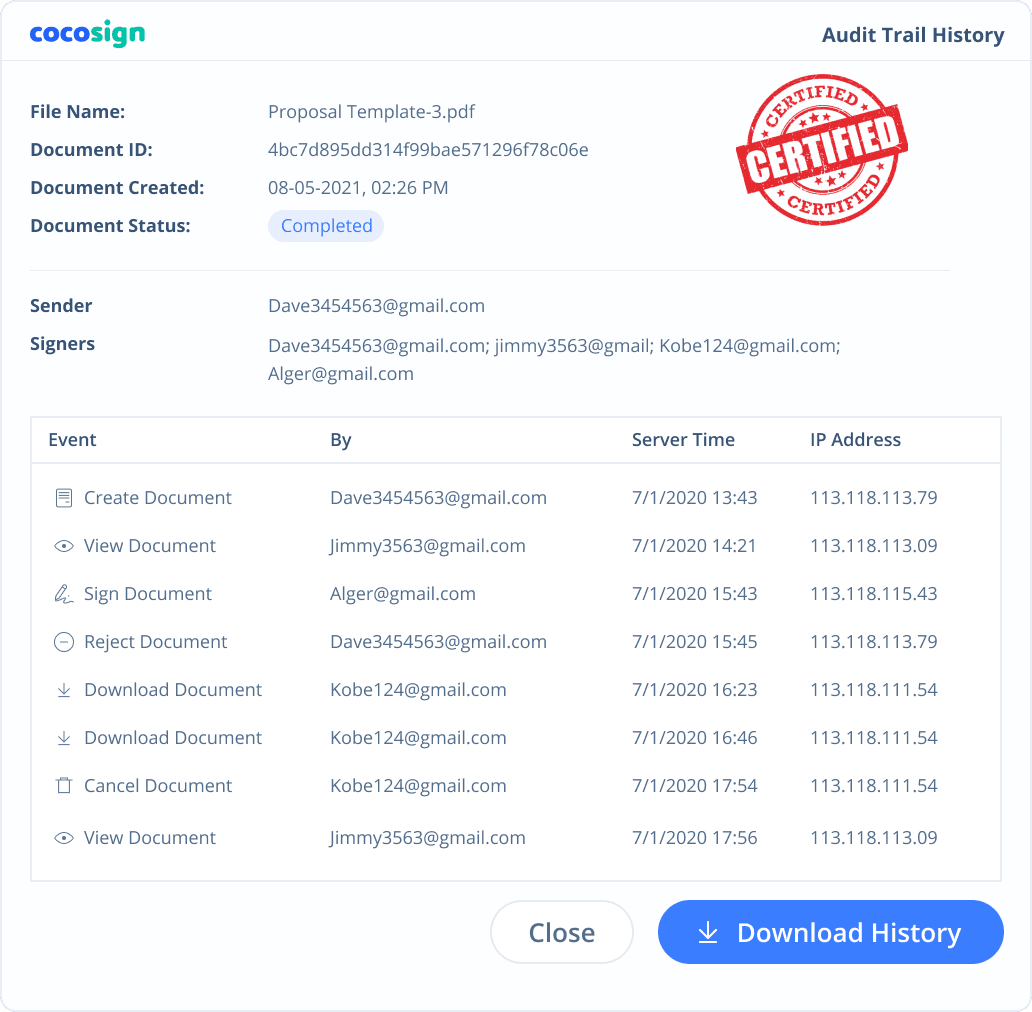

Yes. CocoSign’s eSign complies with various signature laws and security regulations. Its eSignatures are also HIPAA and PHIPA compliant. CocoSign also provides an audit trail for every signing activity, with comprehensive information such as the signers’ IP addresses, email addresses, and fingerprints. Together, these help establish the validity of eSignatures powered by CocoSign. You can even use them as court evidence if needed.

Are e-signatures secure?

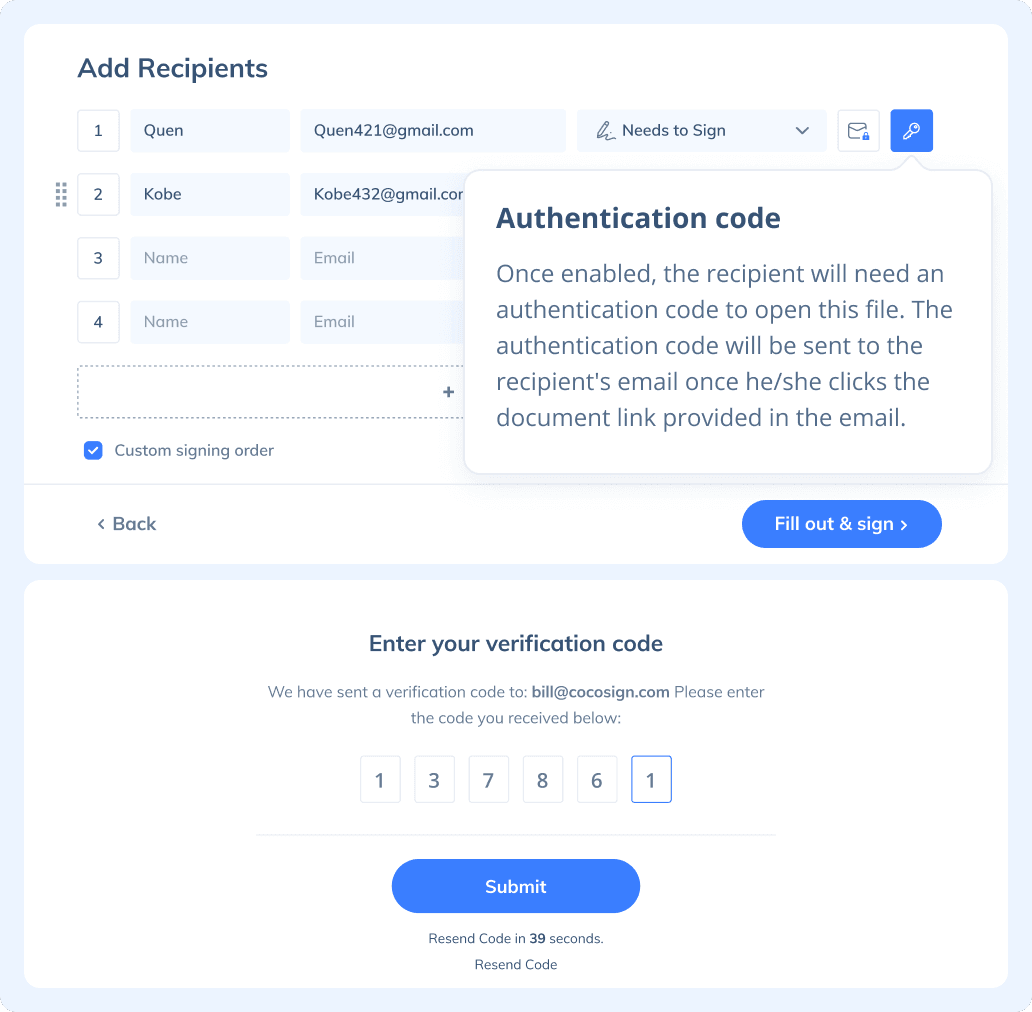

Yes. CocoSign prioritizes eSignature security above everything else. From identity authentication to data transmission, storage, and auditing, CocoSign adopts bank-level encryption measures to keep all your signed data secure throughout the entire signing journey.

How do I create an electronic signature?

Creating an electronic signature with CocoSign is super easy. Simply type or draw your name on CocoSign’s signature generator page, and you get to personalize your own eSignature. Or you can upload your ready-made signature onto CocoSign’s platform.

What is a digital signature?

A digital signature is a specific type of electronic signature, usually with a much higher level of security than common eSignatures. It requires signers to authenticate their identities with a certificate-based digital ID. Compliant with the strictest legal regulations, it can be used for signing high-risk transactions, and it can sometimes rival handwritten signatures thanks to its strong resistance to forgery and theft.

Is a digital signature the same as an electronic signature?

No. There are different types of electronic signatures, which vary in evidential weight and in many other respects. The digital signature is one of them. Common eSignatures use regular authentication methods to verify signer identity, whereas digital signatures use stricter certificate-based digital IDs for the same purpose.

How do I electronically sign a PDF?

eSigning a PDF with CocoSign is easy. Here is how:

- Step 1: Log in to CocoSign with your Google account or register a new CocoSign account.

- Step 2: Choose between Send for Signatures and Sign Yourself.

If you need to sign a PDF yourself, simply upload the document, drag the necessary fields from the toolbar on the right, and finish signing. Click the Download button once you have completed signing.

If you need to send a document out for others to sign, select Send for Signatures in the dashboard, upload the file, and add recipients before sending it out. CocoSign will then help you follow up with signers and update you once anyone finishes signing. Finally, a signed PDF copy will be sent to your email for downloading.
